A) Create multiple IAM service roles for Systems Manager so that the ssm.amazonaws.com service can execute the AssumeRole operation on every instance. Register the role on a per-resource level to enable the creation of a service token. Perform managed-instance activation with the newly created service role attached to each managed instance.

B) Create an IAM service role for Systems Manager so that the ssm.amazonaws.com service can execute the AssumeRole operation. Register the role to enable the creation of a service token. Perform managed-instance activation with the newly created service role.

C) Using previously obtained activation codes and activation IDs, download and install the SSM Agent on the hybrid servers, and register the servers or virtual machines on the Systems Manager service. Hybrid instances will show with an "mi-" prefix in the SSM console.

D) Using previously obtained activation codes and activation IDs, download and install the SSM Agent on the hybrid servers, and register the servers or virtual machines on the Systems Manager service. Hybrid instances will show with an "i-" prefix in the SSM console as if they were provisioned as a regular Amazon EC2 instance.

E) Run AWS Config to create a list of instances that are unpatched and not compliant. Create an instance scheduler job, and through an AWS Lambda function, perform the instance patching to bring them up to compliance.

G) A) and C)

Correct Answer

verified

Multiple Choice

An AWS CodePipeline pipeline has implemented a code release process. The pipeline is integrated with AWS CodeDeploy to deploy versions of an application to multiple Amazon EC2 instances for each CodePipeline stage. During a recent deployment, the pipeline failed due to a CodeDeploy issue. The DevOps team wants to improve monitoring and notifications during deployment to decrease resolution times. What should the DevOps Engineer do to create notifications when issues are discovered?

A) Implement AWS CloudWatch Logs for CodePipeline and CodeDeploy, create an AWS Config rule to evaluate code deployment issues, and create an Amazon SNS topic to notify stakeholders of deployment issues.

B) Implement AWS CloudWatch Events for CodePipeline and CodeDeploy, create an AWS Lambda function to evaluate code deployment issues, and create an Amazon SNS topic to notify stakeholders of deployment issues.

C) Implement AWS CloudTrail to record CodePipeline and CodeDeploy API call information, create an AWS Lambda function to evaluate code deployment issues, and create an Amazon SNS topic to notify stakeholders of deployment issues.

D) Implement AWS CloudWatch Events for CodePipeline and CodeDeploy, create an Amazon Inspector assessment target to evaluate code deployment issues, and create an Amazon SNS topic to notify stakeholders of deployment issues.

F) A) and B)

Correct Answer

verified
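
As a rough illustration of event-driven deployment monitoring of the kind these options describe, the sketch below checks a CloudWatch Events style payload for a failed CodeDeploy or CodePipeline run and builds a notification message. The function names, the sample event values, and the message format are hypothetical, and the failure state names are an assumption to verify against the event reference. Publishing to Amazon SNS is deliberately left out so the block runs without AWS credentials.

```python
# Assumed failure state names for deployment and pipeline state-change events.
FAILURE_STATES = {"FAILURE", "FAILED"}
WATCHED_SOURCES = {"aws.codedeploy", "aws.codepipeline"}


def is_deployment_failure(event: dict) -> bool:
    """Return True when the event comes from a watched service and reports a failure."""
    if event.get("source") not in WATCHED_SOURCES:
        return False
    state = event.get("detail", {}).get("state", "")
    return state in FAILURE_STATES


def build_notification(event: dict) -> str:
    """Format a short message that a handler could forward to stakeholders."""
    detail = event.get("detail", {})
    target = detail.get("deploymentId") or detail.get("pipeline") or "unknown"
    return f"{event['source']} reported state {detail.get('state')} for {target}"


# Hypothetical sample payload, trimmed to the fields used above.
sample_event = {
    "source": "aws.codedeploy",
    "detail": {"state": "FAILURE", "deploymentId": "d-EXAMPLE"},
}

if is_deployment_failure(sample_event):
    print(build_notification(sample_event))
```

In a real setup the filtering would more likely live in the event rule's pattern, with a function like this only shaping the message.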

Multiple Choice

When running a playbook on a remote target host, you receive a Python error similar to "[Errno 13] Permission denied: '/home/nick/.ansible/tmp'". What would be the most likely cause of this problem?

A) The user's home or '.ansible' directory on the Ansible system is not writeable by the user running the play.

B) The specified user does not exist on the remote system.

C) The user running 'ansible-playbook' must run it from their own home directory.

D) The user's home or '.ansible' directory on the Ansible remote host is not writeable by the user running the play.

F) A) and C)

Correct Answer

verified

Multiple Choice

A company wants to implement a CI/CD pipeline for building and testing its mobile apps. A DevOps Engineer has been given the following requirements: Use AWS CodePipeline to orchestrate the workflow. Test the application on real devices. Trigger a notification. Stage the application binary on a production bucket in a different account. Make the application binary publicly accessible. Which sequence of actions should the Engineer perform in the pipeline to meet the requirements?

A) Use AWS CodeCommit as the code source and AWS CodeDeploy to compile and package the application. Use CodeDeploy to deploy the application binary to an AWS Lambda function for testing. Use a third-party library on AWS Lambda to simulate the device platform. Allow a Lambda role to upload to the production Amazon S3 bucket. Make the binary publicly accessible. Trigger notifications using Amazon SNS.

B) Use GitHub as the code source and AWS Lambda to compile and package the application. Use another Lambda function to run unit tests and deliver the application binary to a development bucket. Use the binary from the development bucket and install the application on a personal device for testing. Deliver the binary to the production bucket after approval. Trigger notifications using Amazon SNS.

C) Use an Amazon S3 bucket as the code source and AWS CodeBuild to compile and package the application. Use AWS CodeDeploy to deploy the application binary to a device farm for testing. Deliver the binary to the production S3 bucket. Use an S3 bucket policy to allow public read on the production S3 bucket. Trigger notifications using an Amazon CloudWatch Events rule with Amazon SNS.

D) Use AWS CodeCommit as the code source and AWS CodeBuild to compile and package the application. Invoke an AWS Lambda function that uploads the application binary to a device farm for testing. Deliver the binary to the production Amazon S3 bucket. Use an S3 bucket policy to allow public read on the production S3 bucket. Trigger notifications by using an Amazon CloudWatch Events rule.

F) A) and C)

Correct Answer

verified

Multiple Choice

Fill the blanks: __________ helps us track AWS API calls and transitions, _________ helps to understand what resources we have now, and ________ allows auditing credentials and logins.

A) AWS Config, CloudTrail, IAM Credential Reports

B) CloudTrail, IAM Credential Reports, AWS Config

C) CloudTrail, AWS Config, IAM Credential Reports

D) AWS Config, IAM Credential Reports, CloudTrail

F) A) and B)

Correct Answer

verified

Multiple Choice

A highly regulated company has a policy that DevOps Engineers should not log in to their Amazon EC2 instances except in emergencies. If a DevOps Engineer does log in, the Security team must be notified within 15 minutes of the occurrence. Which solution will meet these requirements?

A) Install the Amazon Inspector agent on each EC2 instance. Subscribe to Amazon CloudWatch Events notifications. Trigger an AWS Lambda function to check if a message is about user logins. If it is, send a notification to the Security team using Amazon SNS.

B) Install the Amazon CloudWatch agent on each EC2 instance. Configure the agent to push all logs to Amazon CloudWatch Logs and set up a CloudWatch metric filter that searches for user logins. If a login is found, send a notification to the Security team using Amazon SNS.

C) Set up AWS CloudTrail with Amazon CloudWatch Logs. Subscribe CloudWatch Logs to Amazon Kinesis. Attach AWS Lambda to Kinesis to parse and determine if a log contains a user login. If it does, send a notification to the Security team using Amazon SNS.

D) Set up a script on each Amazon EC2 instance to push all logs to Amazon S3. Set up an S3 event to trigger an AWS Lambda function, which triggers an Amazon Athena query to run. The Athena query checks for logins and sends the output to the Security team using Amazon SNS.

F) A) and B)

Correct Answer

verified

Multiple Choice

You have deployed an application to AWS that uses Auto Scaling to launch new instances. You now want to change the instance type for the new instances. Which of the following is one of the action items to achieve this deployment?

A) Use Elastic Beanstalk to deploy the new application with the new instance type

B) Use CloudFormation to deploy the new application with the new instance type

C) Create a new launch configuration with the new instance type

D) Create new EC2 instances with the new instance type and attach them to the Auto Scaling group

F) All of the above

Correct Answer

verified

Multiple Choice

A company is using AWS for an application. The Development team must automate its deployments. The team has set up an AWS CodePipeline to deploy the application to Amazon EC2 instances by using AWS CodeDeploy after it has been built using the AWS CodeBuild service. The team would like to add automated testing to the pipeline to confirm that the application is healthy before deploying it to the next stage of the pipeline using the same code. The team requires a manual approval action before the application is deployed, even if the test is successful. The testing and approval must be accomplished at the lowest costs, using the simplest management solution. Which solution will meet these requirements?

A) Add a manual approval action after the last deploy action of the pipeline. Use Amazon SNS to inform the team of the stage being triggered. Next, add a test action using CodeBuild to do the required tests. At the end of the pipeline, add a deploy action to deploy the application to the next stage.

B) Add a test action after the last deploy action of the pipeline. Configure the action to use CodeBuild to perform the required tests. If these tests are successful, mark the action as successful. Add a manual approval action that uses Amazon SNS to notify the team, and add a deploy action to deploy the application to the next stage.

C) Create a new pipeline that uses a source action that gets the code from the same repository as the first pipeline. Add a deploy action to deploy the code to a test environment. Use a test action using AWS Lambda to test the deployment. Add a manual approval action by using Amazon SNS to notify the team, and add a deploy action to deploy the application to the next stage.

D) Add a test action after the last deployment action. Use a Jenkins server on Amazon EC2 to do the required tests and mark the action as successful if the tests pass. Create a manual approval action that uses Amazon SQS to notify the team and add a deploy action to deploy the application to the next stage.

F) All of the above

Correct Answer

verified

Multiple Choice

A DevOps Engineer at a startup cloud-based gaming company has the task of formalizing deployment strategies. The strategies must meet the following requirements: Use standard Git commands, such as git clone and git push, for the code repository. Management tools should maximize the use of platform solutions where possible. Deployment packages must be immutable and in the form of Docker images. How can the Engineer meet these requirements?

A) Use AWS CodePipeline to trigger a build process when software is pushed to a self-hosted GitHub repository. CodePipeline will use a Jenkins build server to build new Docker images. CodePipeline will deploy into a second target group in Amazon ECS behind an Application Load Balancer. Cutover will be managed by swapping the listener rules on the Application Load Balancer.

B) Use AWS CodePipeline to trigger a build process when software is pushed to a private GitHub repository. CodePipeline will use AWS CodeBuild to build new Docker images. CodePipeline will deploy into a second target group in Amazon ECS behind an Application Load Balancer. Cutover will be managed by swapping the listener rules on the Application Load Balancer.

C) Use a Jenkins pipeline to trigger a build process when software is pushed to a private GitHub repository. AWS CodePipeline will use AWS CodeBuild to build new Docker images. CodePipeline will deploy into a second target group in Amazon ECS behind an Application Load Balancer. Cutover will be managed by swapping the listener rules on the Application Load Balancer.

D) Use AWS CodePipeline to trigger a build process when software is pushed to an AWS CodeCommit repository. CodePipeline will use an AWS CodeBuild build server to build new Docker images. CodePipeline will deploy into a second target group in a Kubernetes Cluster hosted on Amazon EC2 behind an Application Load Balancer. Cutover will be managed by swapping the listener rules on the Application Load Balancer.

F) A) and C)

Correct Answer

verified

Multiple Choice

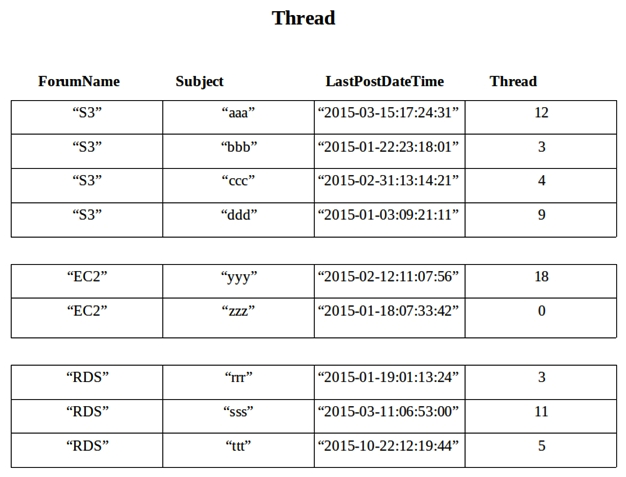

A company wants to use Amazon DynamoDB for maintaining metadata on its forums. See the sample data set in the image above. A DevOps Engineer is required to define the table schema with the partition key, the sort key, the local secondary index, projected attributes, and fetch operations. The schema should support the following example searches using the least provisioned read capacity units to minimize cost:

- Search within ForumName for items where the subject starts with 'a'.
- Search forums within the given LastPostDateTime time frame.
- Return the thread value where LastPostDateTime is within the last three months.

Which schema meets the requirements?

A) Use Subject as the primary key and ForumName as the sort key. Have LSI with LastPostDateTime as the sort key and fetch operations for thread.

B) Use ForumName as the primary key and Subject as the sort key. Have LSI with LastPostDateTime as the sort key and the projected attribute thread.

C) Use ForumName as the primary key and Subject as the sort key. Have LSI with Thread as the sort key and the projected attribute LastPostDateTime.

D) Use Subject as the primary key and ForumName as the sort key. Have LSI with Thread as the sort key and fetch operations for LastPostDateTime.

F) B) and D)

Correct Answer

verified
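
For readers unfamiliar with how a local secondary index (LSI) is declared, the sketch below writes out one of the layouts named in the options (ForumName as partition key, Subject as sort key, an LSI sorted on LastPostDateTime that projects the thread attribute) as a plain Python dictionary in the general shape of a DynamoDB table definition. The table name, index name, attribute types, and the `Thread` attribute spelling are assumptions for illustration, and throughput settings are omitted, so treat it as a sketch rather than a complete definition. The helper checks one rule that holds for every LSI: it must reuse the table's partition key.

```python
# Hypothetical names: "ForumThreads" and "LastPostIndex" are not from the question.
table_definition = {
    "TableName": "ForumThreads",
    "AttributeDefinitions": [
        {"AttributeName": "ForumName", "AttributeType": "S"},
        {"AttributeName": "Subject", "AttributeType": "S"},
        # Assumes timestamps are stored as sortable ISO 8601 strings.
        {"AttributeName": "LastPostDateTime", "AttributeType": "S"},
    ],
    "KeySchema": [
        {"AttributeName": "ForumName", "KeyType": "HASH"},   # partition key
        {"AttributeName": "Subject", "KeyType": "RANGE"},    # sort key
    ],
    "LocalSecondaryIndexes": [
        {
            "IndexName": "LastPostIndex",
            "KeySchema": [
                {"AttributeName": "ForumName", "KeyType": "HASH"},
                {"AttributeName": "LastPostDateTime", "KeyType": "RANGE"},
            ],
            # Project only the extra attribute the queries need to read.
            "Projection": {
                "ProjectionType": "INCLUDE",
                "NonKeyAttributes": ["Thread"],
            },
        }
    ],
}


def partition_key(key_schema: list) -> str:
    """Return the name of the HASH (partition) key in a key schema."""
    return next(k["AttributeName"] for k in key_schema if k["KeyType"] == "HASH")


def lsi_shares_partition_key(definition: dict) -> bool:
    """Every local secondary index must use the table's own partition key."""
    table_pk = partition_key(definition["KeySchema"])
    return all(
        partition_key(index["KeySchema"]) == table_pk
        for index in definition["LocalSecondaryIndexes"]
    )


print(lsi_shares_partition_key(table_definition))
```

Projecting an attribute into the index lets a query on the index return it without a second read against the base table, which is why projection choices affect consumed read capacity.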

Multiple Choice

The project you are working on currently uses a single AWS CloudFormation template to deploy its AWS infrastructure, which supports a multi-tier web application. You have been tasked with organizing the AWS CloudFormation resources so that they can be maintained in the future, and so that different departments such as Networking and Security can review the architecture before it goes to Production. How should you do this in a way that accommodates each department, using their existing workflows?

A) Organize the AWS CloudFormation template so that related resources are next to each other in the template, such as VPC subnets and routing rules for Networking and security groups and IAM information for Security.

B) Separate the AWS CloudFormation template into a nested structure that has individual templates for the resources that are to be governed by different departments, and use the outputs from the networking and security stacks for the application template that you control

C) Organize the AWS CloudFormation template so that related resources are next to each other in the template for each department's use, leverage your existing continuous integration tool to constantly deploy changes from all parties to the Production environment, and then run tests for validation.

D) Use a custom application and the AWS SDK to replicate the resources defined in the current AWS CloudFormation template, and use the existing code review system to allow other departments to approve changes before altering the application for future deployments.

F) C) and D)

Correct Answer

verified

Multiple Choice

During metric analysis, your team has determined that the company's website is experiencing response times during peak hours that are higher than anticipated. You currently rely on Auto Scaling to make sure that you are scaling your environment during peak windows. How can you improve your Auto Scaling policy to reduce this high response time? (Choose two.)

A) Push custom metrics to CloudWatch to monitor your CPU and network bandwidth from your servers, which will allow your Auto Scaling policy to have better fine-grain insight.

B) Increase your Auto Scaling group's number of max servers.

C) Create a script that runs and monitors your servers; when it detects an anomaly in load, it posts to an Amazon SNS topic that triggers Elastic Load Balancing to add more servers to the load balancer.

D) Push custom metrics to CloudWatch for your application that include more detailed information about your web application, such as how many requests it is handling and how many are waiting to be processed.

E) Update the CloudWatch metric used for your Auto Scaling policy, and enable sub-minute granularity to allow auto scaling to trigger faster.

G) All of the above

Correct Answer

verified

Multiple Choice

A DevOps Engineer needs to back up sensitive Amazon S3 objects that are stored within an S3 bucket with a private bucket policy using the S3 cross-region replication functionality. The objects need to be copied to a target bucket in a different AWS Region and account. Which actions should be performed to enable this replication? (Choose three.)

A) Create a replication IAM role in the source account.

B) Create a replication IAM role in the target account.

C) Add statements to the source bucket policy allowing the replication IAM role to replicate objects.

D) Add statements to the target bucket policy allowing the replication IAM role to replicate objects.

E) Set AccessControlTranslation.OwnerOverride to true in the replication configuration and add a statement to the target bucket policy allowing the replication IAM role to override object ownership.

F) Set AccessControlTranslation.Owner to destination in the replication configuration and add a statement to the target bucket policy allowing the replication IAM role to override object ownership.

H) A) and B)

Correct Answer

verified

Multiple Choice

You are responsible for a large-scale video transcoding system that operates with an Auto Scaling group of video transcoding workers. The Auto Scaling group is configured with a minimum of 750 Amazon EC2 instances and a maximum of 1000 Amazon EC2 instances. You are using Amazon SQS to pass a message containing the URI for a video stored in Amazon S3 to the transcoding workers. An Amazon CloudWatch alarm has notified you that the queue depth is becoming very large. How can you resolve the alarm without the risk of increasing the time to transcode videos? (Choose two.)

A) Create a second queue in Amazon SQS.

B) Adjust the Amazon CloudWatch alarms for a higher queue depth.

C) Create a new Auto Scaling group with a launch configuration that has a larger Amazon EC2 instance type.

D) Add an additional Availability Zone to the Auto Scaling group configuration.

E) Change the Amazon CloudWatch alarm so that it monitors the CPU utilization of the Amazon EC2 instances rather than the Amazon SQS queue depth.

F) Adjust the Auto Scaling group configuration to increase the maximum number of Amazon EC2 instances.

H) A) and E)

Correct Answer

verified

Multiple Choice

Consider the portion of a CloudTrail log file below. Which type of event is being captured? "eventTime":"2016-07-16T17:35:32Z", "eventSource":"signin.amazonaws.com", "eventName":"ConsoleLogin", "awsRegion":"us-west-1", "sourceIPAddress":"192.1.2.10", ...

A) AWS console sign-in

B) AWS log off

C) AWS error

D) AWS deployment

F) A) and C)

Correct Answer

verified
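
To make the log excerpt in this question easier to read, the sketch below loads the fields shown in the question into a Python dictionary and summarizes them. Only the five fields visible in the excerpt are used; the elided remainder of the record is not reconstructed. The function name and output format are hypothetical.

```python
import json

# The fields below are copied from the excerpt; anything after them is omitted.
raw_record = """
{
  "eventTime": "2016-07-16T17:35:32Z",
  "eventSource": "signin.amazonaws.com",
  "eventName": "ConsoleLogin",
  "awsRegion": "us-west-1",
  "sourceIPAddress": "192.1.2.10"
}
"""


def describe_event(record: dict) -> str:
    """Summarize which service emitted the event and what action it recorded."""
    service = record["eventSource"].split(".")[0]
    return (
        f"{service}:{record['eventName']} from {record['sourceIPAddress']} "
        f"in {record['awsRegion']} at {record['eventTime']}"
    )


record = json.loads(raw_record)
print(describe_event(record))
```

The `eventSource` and `eventName` fields together identify the service and the action, which is usually the quickest way to tell what kind of event a record captures.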

Multiple Choice

A mobile application running on eight Amazon EC2 instances is relying on a third-party API endpoint. The third-party service has a high failure rate because of limited capacity, which is expected to be resolved in a few weeks. In the meantime, the mobile application developers have added a retry mechanism and are logging failed API requests. A DevOps Engineer must automate the monitoring of application logs and count the specific error messages; if there are more than 10 errors within a 1-minute window, the system must issue an alert. How can the requirements be met with MINIMAL management overhead?

A) Install the Amazon CloudWatch Logs agent on all instances to push the application logs to CloudWatch Logs. Use metric filters to count the error messages every minute, and trigger a CloudWatch alarm if the count exceeds 10 errors.

B) Install the Amazon CloudWatch Logs agent on all instances to push the access logs to CloudWatch Logs. Create CloudWatch Events rule to count the error messages every minute, and trigger a CloudWatch alarm if the count exceeds 10 errors.

C) Install the Amazon CloudWatch Logs agent on all instances to push the application logs to CloudWatch Logs. Use a metric filter to generate a custom CloudWatch metric that records the number of failures and triggers a CloudWatch alarm if the custom metric reaches 10 errors in a 1-minute period.

D) Deploy a custom script on all instances to check application logs regularly in a cron job. Count the number of error messages every minute, and push a data point to a custom CloudWatch metric. Trigger a CloudWatch alarm if the custom metric reaches 10 errors in a 1-minute period.

F) All of the above

Correct Answer

verified
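
The counting rule in this question (more than 10 matching errors within a 1-minute window) can be illustrated locally. The sketch below is a plain Python simulation of that logic, not a CloudWatch API call: it buckets log lines by minute, counts the lines that contain an error pattern, and reports the minutes that exceed the threshold. The log line format, the `API_REQUEST_FAILED` pattern, and the function names are assumptions made for the example.

```python
from collections import Counter
from datetime import datetime


def count_errors_per_minute(log_lines, pattern="API_REQUEST_FAILED"):
    """Count lines containing the pattern, grouped by the minute of their timestamp.

    Each line is assumed to start with an ISO 8601 timestamp followed by a space.
    """
    counts = Counter()
    for line in log_lines:
        timestamp, _, message = line.partition(" ")
        if pattern in message:
            minute = datetime.fromisoformat(timestamp).replace(second=0, microsecond=0)
            counts[minute] += 1
    return counts


def minutes_in_alarm(counts, threshold=10):
    """Return the minutes whose error count is strictly greater than the threshold."""
    return sorted(minute for minute, count in counts.items() if count > threshold)


# Hypothetical sample: 11 failures in one minute, 10 in the next, plus noise.
sample_lines = (
    [f"2024-01-01T12:00:{s:02d} API_REQUEST_FAILED retrying" for s in range(11)]
    + [f"2024-01-01T12:01:{s:02d} API_REQUEST_FAILED retrying" for s in range(10)]
    + ["2024-01-01T12:01:30 request ok"]
)

error_counts = count_errors_per_minute(sample_lines)
print(minutes_in_alarm(error_counts))
```

Note the strict comparison: a minute with exactly 10 errors does not trip the alert, matching the "more than 10" wording in the question.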

Multiple Choice

Your organization has decided to implement a third-party configuration management tool that uses a master server from which nodes pull configuration. You have built a custom base Amazon Machine Image that already has the third-party configuration management agent installed. You want to use the same base AMI in Development, Test and Production environments, each of which has its own master server. How should you configure your Amazon EC2 instances to register with the correct master server on launch?

A) Create a tag for all instances that specifies their environment. When launching instances, provide an Amazon EC2 UserData script that gets this tag by querying the MetaData Service and registers the agent with the master.

B) Use Amazon CloudFormation to describe your environment. Configure an input parameter for the master server hostname/address, and use this parameter within an Amazon EC2 UserData script that registers the agent with the master.

C) Create a script on your third-party configuration management master server that queries the Amazon EC2 API for new instances and registers them with it.

D) Use Amazon Simple Workflow Service to automate the process of registering new instances with your master server. Use an Environment tag in Amazon EC2 to register instances with the correct master server.

F) None of the above

Correct Answer

verified

Multiple Choice

A DevOps Engineer must track the health of a stateless RESTful service sitting behind a Classic Load Balancer. The deployment of new application revisions is through a CI/CD pipeline. If the service's latency increases beyond a defined threshold, deployment should be stopped until the service has recovered. Which of the following methods allows for the QUICKEST detection time?

A) Use Amazon CloudWatch metrics provided by Elastic Load Balancing to calculate average latency. Alarm and stop deployment when latency increases beyond the defined threshold.

B) Use AWS Lambda and Elastic Load Balancing access logs to detect average latency. Alarm and stop deployment when latency increases beyond the defined threshold.

C) Use AWS CodeDeploy's MinimumHealthyHosts setting to define thresholds for rolling back deployments. If these thresholds are breached, roll back the deployment.

D) Use Metric Filters to parse application logs in Amazon CloudWatch Logs. Create a filter for latency. Alarm and stop deployment when latency increases beyond the defined threshold.

F) B) and C)

Correct Answer

verified

Multiple Choice

A DevOps Engineer needs to deploy a scalable three-tier Node.js application in AWS. The application must have zero downtime during deployments and be able to roll back to previous versions. Other applications will also connect to the same MySQL backend database. The CIO has provided the following guidance for logging: Centrally view all current web access server logs. Search and filter web and application logs in near-real time. Retain log data for three months. How should these requirements be met?

A) Deploy the application using AWS Elastic Beanstalk. Configure the environment type for Elastic Load Balancing and Auto Scaling. Create an Amazon RDS MySQL instance inside the Elastic Beanstalk stack. Configure the Elastic Beanstalk log options to stream logs to Amazon CloudWatch Logs. Set retention to 90 days.

B) Deploy the application on Amazon EC2. Configure Elastic Load Balancing and Auto Scaling. Use an Amazon RDS MySQL instance for the database tier. Configure the application to store log files in Amazon S3. Use Amazon EMR to search and filter the data. Set an Amazon S3 lifecycle rule to expire objects after 90 days.

C) Deploy the application using AWS Elastic Beanstalk. Configure the environment type for Elastic Load Balancing and Auto Scaling. Create the Amazon RDS MySQL instance outside the Elastic Beanstalk stack. Configure the Elastic Beanstalk log options to stream logs to Amazon CloudWatch Logs. Set retention to 90 days.

D) Deploy the application on Amazon EC2. Configure Elastic Load Balancing and Auto Scaling. Use an Amazon RDS MySQL instance for the database tier. Configure the application to load streaming log data using Amazon Kinesis Data Firehose into Amazon ES. Delete and create a new Amazon ES domain every 90 days.

F) A) and B)

Correct Answer

verified

Multiple Choice

A DevOps team needs to query information in application logs that are generated by an application running on multiple Amazon EC2 instances deployed with AWS Elastic Beanstalk. Instance log streaming to Amazon CloudWatch Logs was enabled on Elastic Beanstalk. Which approach would be the MOST cost-efficient?

A) Use a CloudWatch Logs subscription to trigger an AWS Lambda function to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use Amazon Athena to query the log data from the bucket.

B) Use a CloudWatch Logs subscription to trigger an AWS Lambda function to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use a new Amazon Redshift cluster and Amazon Redshift Spectrum to query the log data from the bucket.

C) Use a CloudWatch Logs subscription to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use Amazon Athena to query the log data from the bucket.

D) Use a CloudWatch Logs subscription to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use a new Amazon Redshift cluster and Amazon Redshift Spectrum to query the log data from the bucket.

F) B) and D)

Correct Answer

verified
